Relevance of automated generated short summaries of scientific abstract: use case scenario in healthcare

Image credit: IEEE

Image credit: IEEE

Abstract

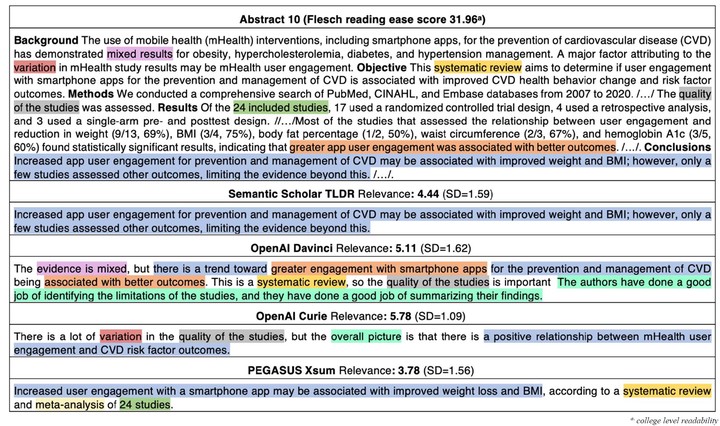

The recent development and successful deployment of large pre-trained natural language models in few-shot and zero-shot scenarios enabled impressive results in different downstream tasks. One such task is abstractive text summarization combining understanding, information compression, and language generation, which might be of great potential in healthcare, where time is a premium. A potential real-world scenario is explored, where the relevance of extremely short summaries from the latest scientific literature is generated by state-of-the-art (SOTA) models and is evaluated by healthcare experts, and can be seen as support at the point of care. In a small-scale study, a baseline fine-tuned model (Semantic Scholar TLDR) was compared with three SOTA models (OpenAI Curie, OpenAI Davinci, and PEGASUS-XSum). Healthcare experts evaluated the abstracts and extremely short summaries considering the scenario and it was observed that in terms of relevance, the OpenAI models and Semantic Scholar TLDR model differed just slightly, i.e. OpenAI Curie model had the highest average score of 4.59 (SD=1.69), followed by with 4.58 (SD=1.58) and OpenAI Davinci with 4.48 (SD=1.87). On the other hand, the PEGASUS-XSum model’s relevance was significantly lower, with 4.01 (SD=1.81). A deeper analysis of selected short summaries has shown that some concepts are difficult to understand for AI models that still have difficulty to “understand”, which often results in uninformative or false facts. One should be aware that extreme summarization using AI-based approaches is still a relatively new field of research and the technology is still not ready to be used in clinical practice. Our small-sample study indicates that it could already support the healthcare experts in the decision-making process.