Local vs. global interpretability of machine learning models in type 2 diabetes mellitus screening

Image credit: Springer

Abstract

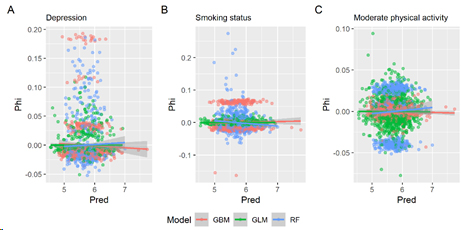

Machine learning-based predictive models have been used in many areas of everyday life for decades. With the recent availability of big data, however, new ways of interpreting the decisions of machine learning models are emerging. In addition to global interpretation, which focuses on the general decisions of a prediction model, this paper emphasizes the importance of local interpretation of predictions. Local interpretation focuses on the specifics of each individual and provides explanations that can lead to a better understanding of feature contributions in smaller groups of individuals, which global interpretation techniques often overlook. In this paper, three machine learning-based prediction models were compared: Gradient Boosting Machine (GBM), Random Forest (RF) and a Generalized Linear Model with regularization (GLM). No significant differences in prediction performance, measured by mean absolute error, were detected: GLM: 0.573 (0.569 − 0.577); GBM: 0.579 (0.575 − 0.583); RF: 0.579 (0.575 − 0.583). Similar to other studies that used prediction models for screening in type 2 diabetes mellitus, we found a strong contribution of features such as age, gender and BMI at the global interpretation level. The local interpretation technique, on the other hand, identified features such as depression, smoking status and physical activity that can be influential in specific groups of patients. This study outlines the prospects of using local interpretation techniques to improve the interpretability of prediction models in the era of personalized healthcare. At the same time, we caution users and developers of prediction models that prediction performance should not be the only criterion for model selection.
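The difference between global and local interpretation can be illustrated with a minimal sketch. It is not the interpretation technique used in the study: it assumes a hypothetical linear risk model in which a feature's local contribution for one individual is its coefficient times the deviation of that individual's value from the cohort mean, and its global importance is the mean absolute contribution across the cohort. The coefficients, the cohort and all function names are invented for illustration only.

```python
from statistics import mean

# Hypothetical linear risk model: made-up coefficients, not taken from the study.
COEFFICIENTS = {"age": 0.04, "bmi": 0.06, "depression": 0.50}

# Hypothetical cohort; depression is a 0/1 indicator that is rare in this group.
COHORT = [
    {"age": 40, "bmi": 24, "depression": 0},
    {"age": 55, "bmi": 31, "depression": 0},
    {"age": 62, "bmi": 28, "depression": 0},
    {"age": 47, "bmi": 35, "depression": 0},
    {"age": 51, "bmi": 27, "depression": 1},
]


def feature_means(cohort):
    """Cohort mean of every feature used by the model."""
    return {name: mean(row[name] for row in cohort) for name in COEFFICIENTS}


def local_contributions(row, means):
    """Local view: coefficient * (value - cohort mean) for one individual."""
    return {
        name: COEFFICIENTS[name] * (row[name] - means[name])
        for name in COEFFICIENTS
    }


def global_importance(cohort, means):
    """Global view: mean absolute local contribution across the whole cohort."""
    per_row = [local_contributions(row, means) for row in cohort]
    return {name: mean(abs(c[name]) for c in per_row) for name in COEFFICIENTS}


def ranking(scores):
    """Feature names ordered from largest to smallest absolute score."""
    return sorted(scores, key=lambda name: abs(scores[name]), reverse=True)


MEANS = feature_means(COHORT)
GLOBAL_RANKING = ranking(global_importance(COHORT, MEANS))
LOCAL_RANKING = ranking(local_contributions(COHORT[-1], MEANS))

print("global:", GLOBAL_RANKING)
print("local (last individual):", LOCAL_RANKING)
```

With these invented numbers, age and BMI lead the global ranking, while depression is the largest contribution for the last individual. This is the kind of subgroup-specific signal that a global summary can hide.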